Bernard Kintzing,

SEO Basics: Beginner's Guide to SEO

What is SEO?

SEO stands for Search Engine Optimization, which is a set of best practices that improve the appearance and ranking of a website in search results. In short, it's the process of tailoring a website to the peculiarities of search engines. Improving SEO leads to an increase in the quality and quantity of traffic to a website or a web page from search engines.

It is important to note that SEO targets unpaid traffic and not direct or paid traffic.

Quality of Traffic: this is how relevant the users and their search queries are to the content on your website. High-quality traffic includes only visitors who are genuinely interested in the products, services, or content on your website. The job of a search engine is to match the content of the SERP (Search Engine Results Page) with the user's intent. Proper website SEO helps the search engine understand the contents of your page.

Quantity of Traffic: the number of users who visit your site through organic search results. A user is more likely to click on a website at the top of the SERP.

Unpaid Traffic: visitors who land on your website by natural means, such as clicking an organic search result. Unpaid traffic is also known as organic traffic. Organic traffic is produced through proper SEO and is the sum of all visits to your website generated through natural or unpaid methods.

Direct Traffic: visitors who typed your site address into the browser's address bar or opened it from a saved bookmark. Also known as direct access, it occurs when a visitor arrives directly on a website without having clicked a link on another site.

Paid Traffic: visitors who accessed your website through a paid source. This web traffic comes from Google Ads, Facebook Ads, or any number of other paid advertising channels.

How does SEO work?

Search engines such as Google, Yahoo, or Bing use bots, also called spiders, to crawl websites. Google's bot is playfully named Googlebot. Web crawlers start at known web pages and follow the internal and external links on each page. From the page's content and its links, the crawler learns what purpose the page serves. The collected information is stored in a database called an index; Google's current index is called Caffeine. When a user enters a search query, the search engine uses the information in its index to display the most accurate and relevant results. The algorithm used by the search engine is extremely complex, with many factors that are constantly evolving.
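To make the idea of an index concrete, here is a toy inverted index in Python. It is a deliberate simplification, not how Google's Caffeine index works: it maps each word to the set of pages containing it and answers a query by intersecting those sets, with no ranking at all. The page URLs and text are hypothetical.

```python
import re
from collections import defaultdict

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map every word to the set of page URLs whose text contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index

def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the pages that contain every word of the query (no ranking)."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results

# Hypothetical pages a crawler might have fetched
pages = {
    "https://example.com/recipes/apple-pie": "Classic apple pie recipe with a flaky crust",
    "https://example.com/recipes/banana-bread": "Moist banana bread recipe",
    "https://example.com/articles/seo-basics": "A beginner's guide to SEO",
}
index = build_index(pages)
print(search(index, "apple pie recipe"))  # {'https://example.com/recipes/apple-pie'}
```

A real search engine would then rank the matching pages using its many signals; this sketch stops at finding them.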

How to implement SEO?

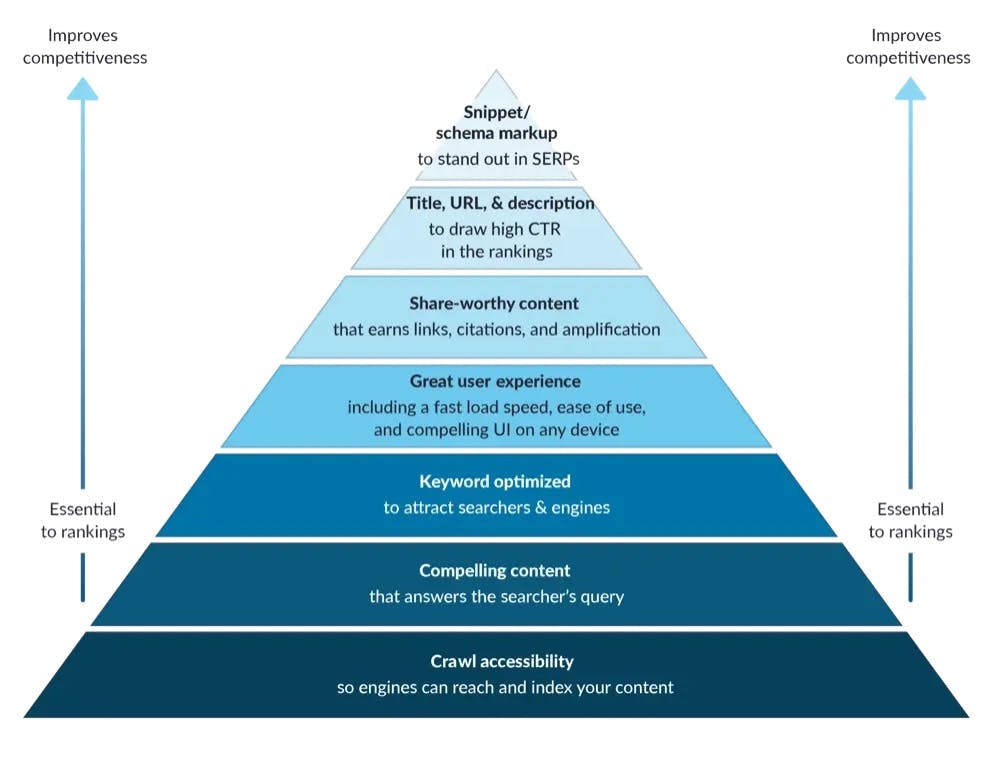

SEO is a complex set of guidelines that can change in small ways each year, as the search engines change how they weigh each aspect of the site. The founder of moz.com created a pyramid hierarchy of each element in SEO.

At the base of the pyramid are the foundations of SEO and the most critical components to ensure a good ranking on the SERP. Moving up the pyramid are the aspects that help your site stand out on the SERP among sites with similar ranking. Following the seven tiers leads to a search engine optimized website.

Crawl Accessibility

If you want your site to rank highly on the SERP, it needs to be friendly to web crawlers. A bot-friendly website makes it easy for search engines to discover its content and make it available to users. A web crawler is trying to complete several basic tasks:

Discover pages through links

Reach a page from primary entry points, such as the home page

Learn the contents of the page

Find links to other pages and repeat the process
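The discover-and-follow loop above can be sketched with Python's standard library. This is a minimal, hypothetical example: instead of fetching pages over the network, it reads HTML from an in-memory `site` dictionary (all URLs are made up), extracts `<a href>` links with `html.parser`, and visits same-site pages breadth-first starting from the home page. A real crawler would also fetch pages over HTTP, respect robots.txt, and throttle its requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag on a page."""
    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

def crawl(site: dict[str, str], start: str) -> list[str]:
    """Breadth-first crawl of an in-memory site; returns pages in the order visited."""
    host = urlparse(start).netloc
    seen = {start}
    queue = deque([start])
    visited: list[str] = []
    while queue:
        url = queue.popleft()
        html = site.get(url)
        if html is None:  # broken link: nothing to fetch
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.links:
            absolute = urljoin(url, href).split("#")[0]  # resolve relative links, drop fragments
            # Stay on the same site and skip pages that are already queued
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited

# Hypothetical site: nothing links to the orphan page
site = {
    "https://example.com/": '<a href="/articles/">Articles</a> <a href="https://other.com/">Other</a>',
    "https://example.com/articles/": '<a href="/articles/seo-basics">SEO Basics</a>',
    "https://example.com/articles/seo-basics": '<a href="/">Home</a>',
    "https://example.com/orphan": "<p>No page links here.</p>",
}
print(crawl(site, "https://example.com/"))
```

The `/orphan` page is never visited because nothing links to it, which is why a page needs at least one link pointing to it before a crawler can find it.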

The first step is to see if your site can be found by Google. The easiest way is to search for site:{sitename}.

The "About XXX results" count can give you an idea of how many pages are accessible to the Googlebot web crawler. To get an accurate and detailed report, you can sign up for a free Google Search Console account. If your important pages are visible in the SERP, you can continue to the next step. If your pages are not showing up, make sure you are not blocking the crawler from accessing them. The crawler can be blocked through several different means:

The robots meta tag on an individual page, or the X-Robots-Tag HTTP response header, which is useful for URLs that are not HTML pages (such as PDFs) where you cannot add meta tags. Follow the Google Search Central guidelines when working with the robots meta tag or the X-Robots-Tag header.

<!-- "index, follow" is the default; "noindex" or "nofollow" blocks indexing or link following -->
<meta name="robots" content="index, follow">
<meta name="googlebot" content="index, follow, max-snippet:-1, max-image-preview:large, max-video-preview:-1">
<meta name="bingbot" content="index, follow, max-snippet:-1, max-image-preview:large, max-video-preview:-1">

The robots.txt file for the website. The robots.txt file tells web crawlers which pages they can and can't crawl, how often to crawl, and other useful access guidelines. Keep in mind that robots.txt controls crawling rather than indexing: to keep a page out of search results, use a noindex directive instead. You can learn more about the robots.txt file or go straight into creating the file.

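# Point crawlers to the sitemap
Sitemap: https://example.com/sitemap.xml

User-agent: *
# Block all PDF files
Disallow: /*.pdf$
# Block the admin area
Disallow: /admin/
# Ask crawlers to wait 10 seconds between requests (Bingbot honors this; Googlebot ignores it)
Crawl-delay: 10

Compelling Content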

At the end of the day, one of the most important parts of SEO is providing users with the content they are interested in. Providing meaningful content is becoming increasingly important as the Google search algorithm becomes more sophisticated. While following SEO guidelines is important, your web content exists to serve a purpose for the reader. Content should not be created just for the sake of a higher search ranking. Higher ranking is a means to attract users; if the content does not retain them, the effort is pointless.

So how do you write a piece of content that provides value to users and follows search engine optimization guidelines? When beginning to write content for a new page, there are several guidelines to follow to help it rank higher in the SERP.

Write a piece of content centered around a keyword. To understand how to choose the right keyword, visit the keyword optimization section.

Focus on publishing complete content. Complete content covers an entire topic and fully answers users' questions or serves their needs. Pages with complete content tend to rank higher on the SERP.

Use visual content. Adding images, video, and other visuals keeps visitors on your page longer. Visual content is more digestible and engaging, which increases average dwell time and reduces bounce rates.

Front-load your titles with the targeted keyword. Google puts a little more weight on terms that show up early so it is important to make sure your keyword is one of these terms.

Use synonyms and related terms of your keyword. If Google sees both your main keyword and related keywords on your page, the algorithm is more likely to flag the page as a comprehensive piece of content.

Early versions of the Google search engine could not understand semantics the way it does today. This used to mean that if your site ranked highly for "jacket", it would not rank for the keyword "coat". Several tactics addressed this issue, such as creating duplicate content for each keyword. Because of these practices, large amounts of low-quality content started appearing. Google addressed this in 2011 with an update called Panda, which penalized low-quality pages. Google has made it clear that it prioritizes quality content, so to avoid unnecessary penalties, make sure you are not following outdated tactics.

Thin Content: Broadly defined as website content that either provides very little value to the user or is not relevant. Google describes thin content as "low-quality or shallow pages on your site." It is important to note that content extends beyond text to include images, headers, videos, and virtually every other element of the page.

Duplicate Content: As the name suggests, duplicate content is content shared across multiple pages, within or across domains. Google does not penalize duplicate content; however, it does filter duplicate versions out of the SERP and typically shows only one version, usually the original source.

Cloaking: The practice of presenting different content or URLs to human users and search engines. Cloaking is considered a violation of Google's Webmaster Guidelines because it provides users with different results than they expected.

Keyword Stuffing: Repeating your target keyword X times does not increase your search ranking X-fold. In fact, it can incur penalties from the search engine. Google provides the following examples:

Lists of phone numbers without substantial added value

Blocks of text that list cities and states that a webpage is trying to rank for

Repeating the same words or phrases so often that it sounds unnatural, for example: We sell custom cigar humidors. Our custom cigar humidors are handmade. If you're thinking of buying a custom cigar humidor, please contact our custom cigar humidor specialists at custom.cigar.humidors@example.com.
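One rough way to spot keyword stuffing is to measure how much of the text a phrase takes up. Google does not publish a density threshold, so treat the function below as a diagnostic sketch only. It counts non-overlapping occurrences of a phrase (letters only, case-insensitive) and reports the share of all words they cover, using Google's humidor example above. The two-word phrase "custom cigar" is used because the example mixes "humidor" and "humidors".

```python
import re

def keyword_density(text: str, phrase: str) -> tuple[int, float]:
    """Count non-overlapping occurrences of a phrase and the share of words they cover."""
    words = re.findall(r"[a-z]+", text.lower())
    target = re.findall(r"[a-z]+", phrase.lower())
    if not words or not target:
        return 0, 0.0
    n = len(target)
    count, i = 0, 0
    while i <= len(words) - n:
        if words[i:i + n] == target:
            count += 1
            i += n  # jump past the match so occurrences never overlap
        else:
            i += 1
    return count, count * n / len(words)

# Google's keyword stuffing example
text = ("We sell custom cigar humidors. Our custom cigar humidors are handmade. "
        "If you're thinking of buying a custom cigar humidor, please contact our "
        "custom cigar humidor specialists at custom.cigar.humidors@example.com.")
count, share = keyword_density(text, "custom cigar")
print(f"{count} occurrences, {share:.0%} of all words")  # 5 occurrences, 29% of all words
```

Nearly a third of the words belong to the phrase, which is the kind of unnatural repetition Google's example warns against.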

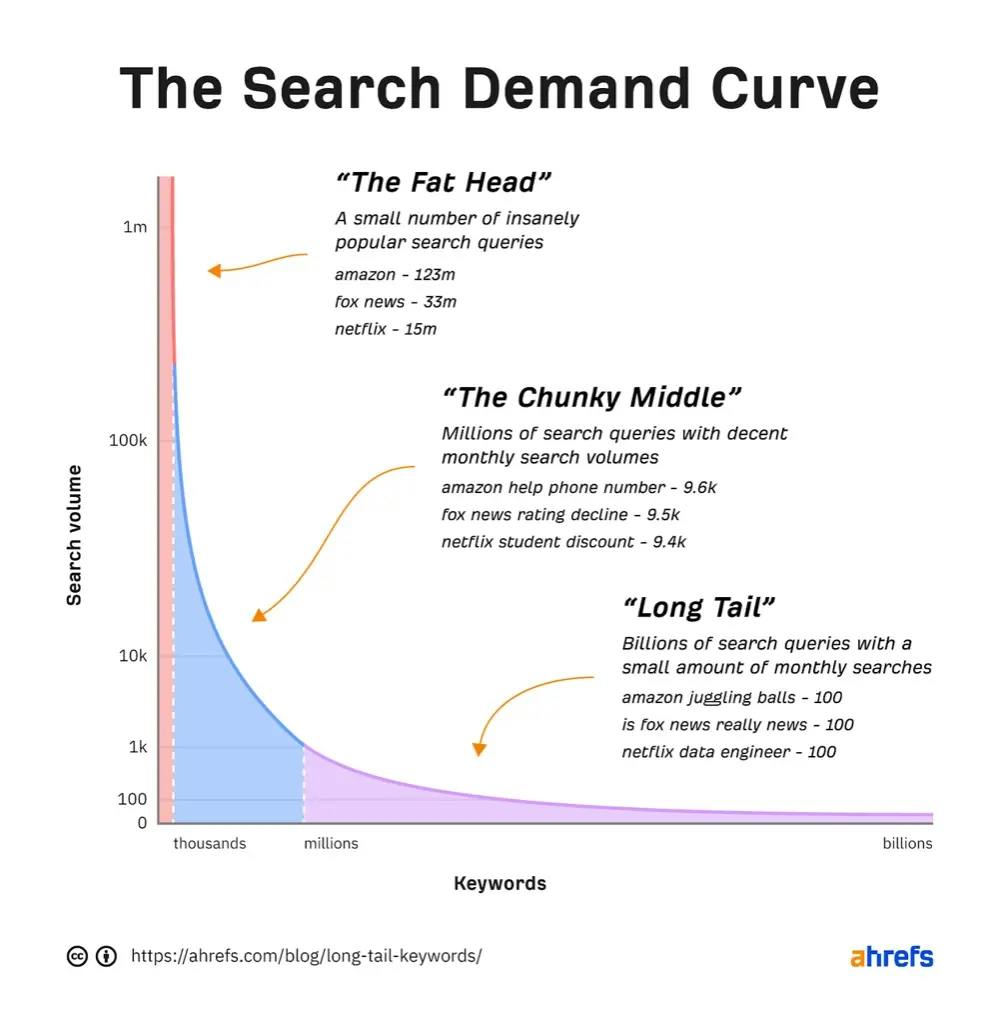

Keyword Optimization

If you want to rank first for a keyword, it is crucial to understand that ranking highly for a competitive keyword is extremely difficult. To achieve success, you need to discover untapped, low-competition long tail keywords. More information on long tail keywords here.

Create a list of keywords: Creating a list of keywords helps your content stay focused. If you are having trouble, several online tools can help:

Keyworddit searches through popular subreddits to identify relevant keywords

Exploding Topics discovers long tail keywords and identifies topics before they reach mainstream popularity

Keyword Tool generates a list of keywords based on a provided seed. While helpful, the results should serve more as assistance than a means to an end.

Find the best keywords from the list: Filter out competitive and irrelevant keywords so you can target the keyword with the highest potential for success.

Do a Google search for the keyword. If the results contain many authority sites (e.g. popular sites such as Wikipedia or Apple), it is a bad keyword to target. For a more precise measure of keyword difficulty, you can use ranking tools such as ahrefs, Semrush, or Moz.

Look at market fit and determine whether these are keywords your customers actually search for. Don't use product and service keywords (e.g. laptop, car, bakery); instead, target terms that your customers search for when they are not searching for what you sell. If you sell personal training packages, target "how to get in shape" instead of "personal training". Informational keywords have higher rates of return.
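The filtering steps above can be sketched as a small function. Everything here is hypothetical: the keyword list and the 0-100 difficulty scores stand in for numbers you would export from a tool such as ahrefs, Semrush, or Moz, and the cut-off of 30 and the three-word minimum for a long tail keyword are arbitrary choices, not published rules.

```python
from dataclasses import dataclass

@dataclass
class Keyword:
    phrase: str
    difficulty: int  # hypothetical 0-100 score from a keyword research tool

def shortlist(keywords: list[Keyword], max_difficulty: int = 30, min_words: int = 3) -> list[str]:
    """Keep long tail, low-difficulty keywords, easiest first."""
    candidates = [
        k for k in keywords
        if k.difficulty <= max_difficulty and len(k.phrase.split()) >= min_words
    ]
    return [k.phrase for k in sorted(candidates, key=lambda k: k.difficulty)]

# Hypothetical export for a personal training business
keywords = [
    Keyword("personal training", 78),
    Keyword("how to get in shape", 25),
    Keyword("how to get in shape at home fast", 12),
    Keyword("fitness", 91),
    Keyword("beginner workout plan for women", 34),
]
print(shortlist(keywords))
# ['how to get in shape at home fast', 'how to get in shape']
```

Market fit, whether your customers actually search these terms, still needs human judgment; the function only automates the mechanical part of the filtering.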

User Experience

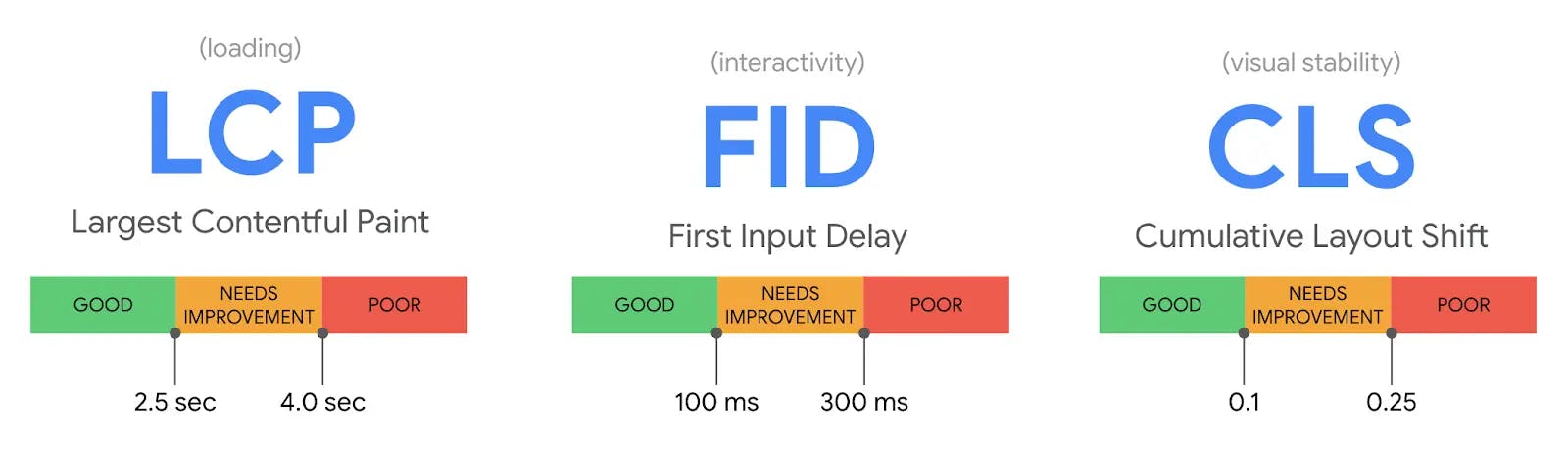

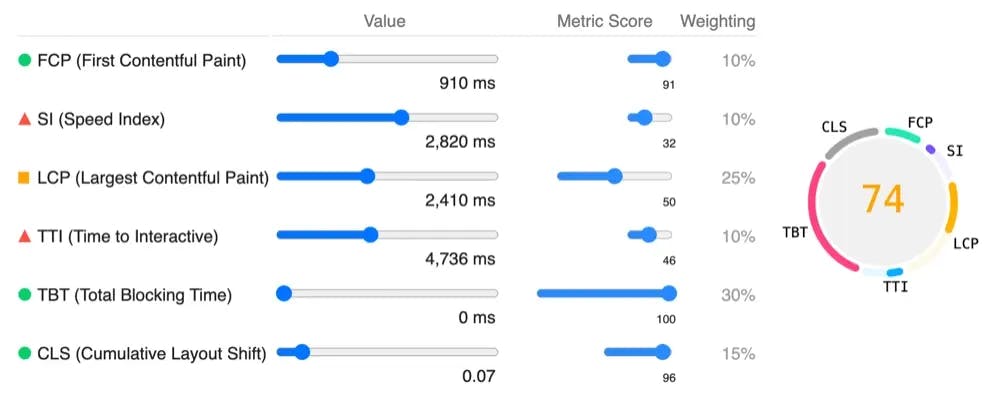

Google has outlined three top metrics that its search engine uses to evaluate user experience. User expectations can vary depending on the site or context; however, regardless of these differences, some constants are always expected: fast loading, interactivity, and visual stability. Google measures each of these standards with a corresponding metric, and together these metrics are referred to as the Core Web Vitals.

Largest Contentful Paint (LCP): Measures the time it takes the website to show the user the largest content on the screen. For the user this is the perceived loading time, i.e. when the site appears fully loaded.

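First Input Delay (FID): Measures the time from when the user first interacts with your site (e.g. clicks a link or taps a button) to when the browser is actually able to respond to that interaction. Delays occur when the browser is busy downloading assets, parsing scripts, or executing blocking operations. In March 2024, Google replaced FID with Interaction to Next Paint (INP), which measures responsiveness across all interactions on the page rather than only the first.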

Cumulative Layout Shift (CLS): Measures the unexpected shifting of webpage elements while the page is still downloading. Layout shift can be caused by a large image or video that is dynamically inserted into the DOM pushing content down.
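Google's web.dev documentation publishes "good" and "poor" thresholds for each metric, assessed at the 75th percentile of page loads: LCP 2.5 s and 4 s, FID 100 ms and 300 ms, CLS 0.1 and 0.25, plus INP 200 ms and 500 ms for the metric that replaced FID in 2024. Values between the two bounds count as "needs improvement". The sketch below simply encodes those thresholds; the sample measurements are made up.

```python
# web.dev thresholds per metric: (upper bound for "good", upper bound for "needs improvement")
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "INP": (200, 500),   # milliseconds; replaced FID as a Core Web Vital in 2024
    "CLS": (0.1, 0.25),  # unitless layout shift score
}

def rate(metric: str, value: float) -> str:
    """Classify a 75th-percentile measurement as good, needs improvement, or poor."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"

# Hypothetical field data for one page
for metric, value in {"LCP": 3.1, "FID": 80, "CLS": 0.3}.items():
    print(metric, value, rate(metric, value))
```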

There are detailed articles from web.dev covering the core metrics: Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS). Google provides several techniques for improving site performance.

There are several ways to test the core metrics for your site. The one I recommend is Google Lighthouse through the Chrome DevTools. To understand how Lighthouse generates a score, you can use the Lighthouse Scoring Calculator.

Website load time and responsiveness correlate directly with how long a user stays on the site. In 2019, Backlinko conducted a study looking at five million desktop and mobile pages. They found that the average time to fully load a webpage was 10.3 seconds on desktop and 27.3 seconds on mobile. These findings support a 2017 analysis by Google that found that the average load time for a mobile landing page is 22 seconds, despite 53% of visitors leaving a page that takes longer than three seconds to load.

Several key findings from the Backlinko study and Google analysis:

As load time increases from 1s...

to 3s the probability of bounce increases by 32%.

to 5s the probability of bounce increases by 90%.

to 6s the probability of bounce increases by 106%.

to 10s the probability of bounce increases by 123%.

The average web page takes 87.84% longer to load on mobile vs. desktop

When comparing major CMSs, Squarespace and Weebly have the best performance, while Wix and WordPress have the worst.

When comparing the time to first byte (TTFB) of web hosts, GitHub and Weebly have the quickest TTFB, while Siteground and Wix are the slowest.

One of the easiest optimizations to implement when looking to reduce website load times is minifying. Minification is the process of removing all unnecessary characters from the source code of interpreted programming languages or markup languages without changing its functionality. A free and easy tool to use is an online CSS & JS Minifier.
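To show what a minifier actually removes, here is a deliberately naive CSS minifier built on regular expressions. It is a sketch for illustration only: it strips comments and collapses whitespace around punctuation, but it does not understand strings, `url()` values, or other edge cases, so use an established tool for production files.

```python
import re

def minify_css(css: str) -> str:
    """Naively minify CSS: drop comments and whitespace that doesn't change meaning."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,>])\s*", r"\1", css)         # trim spaces around punctuation
    css = css.replace(";}", "}")                          # last semicolon in a block is optional
    return css.strip()

source = """
/* Main heading */
h1 {
    color: #333;
    margin: 0 auto;
}
"""
print(minify_css(source))  # h1{color:#333;margin:0 auto}
```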

In addition to the Core Web Vitals, there are other optimizations you can make to help the search engines understand the content on your website.

Header Tags

Header tags are HTML elements ranging from H1 to H6 used to denote headings on your website. The H1 tag is reserved as a unique title for each page and should contain descriptive text front-loaded with your target keyword. The following tags, H2-H6, are for subheadings and should follow the numeric hierarchy. Many SEO webmaster tools mark multiple H1 tags as a serious on-page issue. However, Google's John Mueller has confirmed that Google's search algorithm doesn't have any issues with a page having multiple H1 tags. He also added that multiple H1 tags are perfectly fine if users benefit from having them on a page.
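The numeric hierarchy can be checked automatically. The sketch below uses Python's built-in `html.parser` to list the headings on a page, count the `<h1>` tags, and flag any heading that skips a level (for example an `<h3>` followed directly by an `<h5>`). The function name and the sample markup are illustrative assumptions, not part of any SEO tool.

```python
from html.parser import HTMLParser

class HeadingCollector(HTMLParser):
    """Record the level (1-6) of every <h1>-<h6> start tag in document order."""
    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))

def audit_headings(html: str) -> dict:
    """Count <h1> tags and report headings that jump more than one level deeper."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    skips = [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur > prev + 1]
    return {"h1_count": levels.count(1), "skipped_levels": skips}

page = "<h1>Travel Guide</h1><h2>Cities to Visit</h2><h3>Helena</h3><h5>Museums</h5>"
print(audit_headings(page))  # {'h1_count': 1, 'skipped_levels': [(3, 5)]}
```

Multiple H1 tags are reported as a count rather than treated as an error, in line with Google's position above.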

A travel guide for Montana might follow a similar layout:

<h1>Montana Travel Guide</h1>

<h2>Cities to Visit</h2>

<h3>Helena</h3>

<h3>Missoula</h3>

<h3>Bozeman</h3>

Links

As mentioned above, links are crucial to helping web crawlers index pages. Links that require a click to access (such as those in dropdown menus) are often invisible to crawlers. If all your internal links exist only in the menu, the crawler might not access any of them, so try to also incorporate links directly in the page content.

The anchor text of a link tells users and search engines what the destination page is about. This can be a great place to add the target keyword, as long as you are not keyword stuffing. Below is an example of how to add anchor text in HTML.

<!-- link without anchor text -->
<a href="https://example.com"></a>

<!-- link with descriptive anchor text -->
<a href="https://example.com">Example Website</a>

In the same way we avoid keyword stuffing, we also need to avoid link stuffing. Google's Webmaster Guidelines say to "limit the number of links on a page to a reasonable number (a few thousand at most)." Each link passes value and authority from one page to another, referred to as link equity. Link equity is calculated using several factors:

The relevancy of the link. Links from a baking page to a sports page are most likely not relevant.

Authority of the origin site. Links from an authoritative site or page boost the authority of the destination page. This can be used internally by linking from older, authoritative pages to new pages.

The link's traffic. How often the link is clicked and followed adds to the validity of the link.

The accessibility of the link. If the link is blocked by robots.txt or meta tags, or is hidden in a menu, it will not pass value in Google.

The location of the link. Links located in the footer or the sidebar are not given as much weight as links located in the main body of the page.

The number of links on the page. A page only has so much authority it can give, and it is split among all the links on the page. While there is no clear rule about the maximum number of links allowed on a page, keep a balance between the quality and quantity of links.

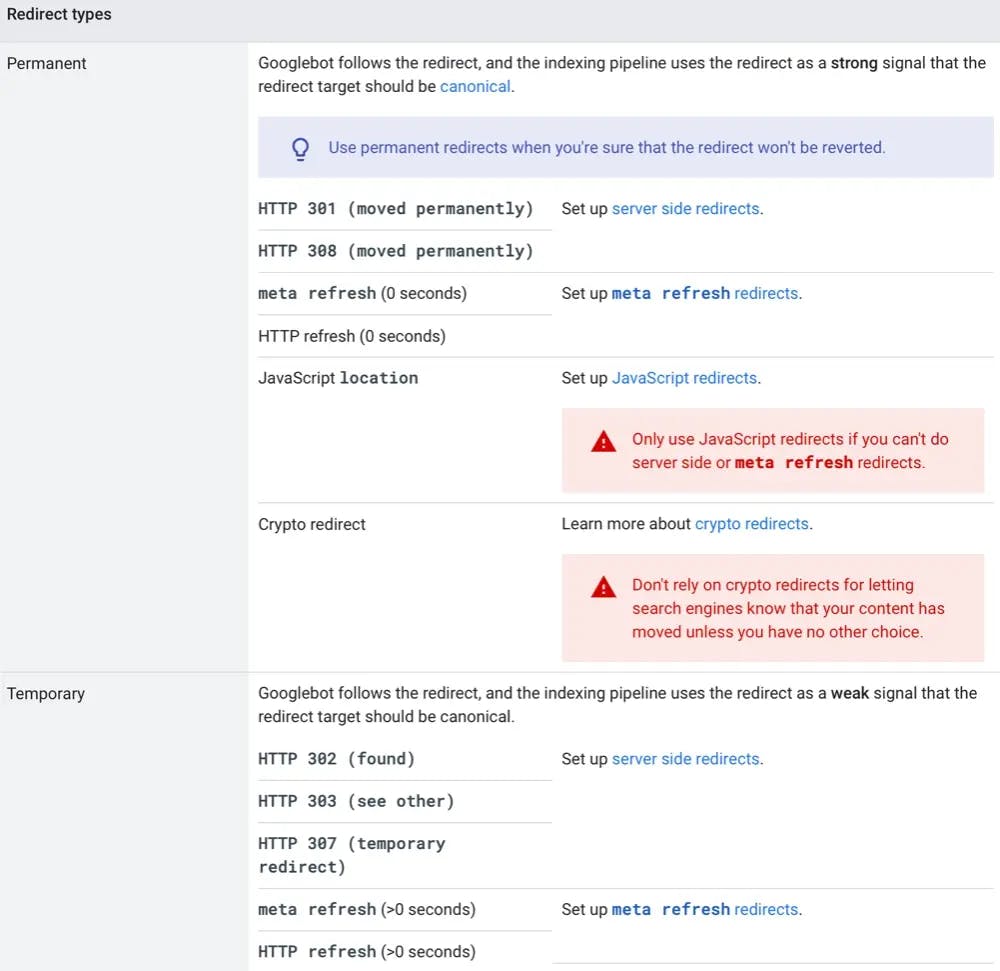

The HTTP status of the link. Links that return a status of 200 do not have a reduction in authority. 301 and 302 redirects may or may not have a negative impact. More on redirects in the section below.
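The idea that a page splits its authority among its outgoing links comes from the original PageRank formula. The sketch below iterates that classic, simplified formulation (damping factor 0.85, equity divided equally among a page's links) over a hypothetical four-page site. It ignores the relevance, placement, and traffic factors listed above and is not Google's current ranking algorithm.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85, iterations: int = 50) -> dict[str, float]:
    """Classic PageRank: each page shares its score equally among the pages it links to.

    Every link target must also appear as a key in `links`.
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1 - damping) / n for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page with no links spreads its score evenly over every page
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical site: "new-post" is linked from both the home page and the blog
site = {
    "home": ["about", "blog", "new-post"],
    "about": ["home"],
    "blog": ["home", "new-post"],
    "new-post": ["home"],
}
for page, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page:10} {score:.3f}")
```

Because the home page is linked from every other page, it collects the most equity, and the extra link from the blog lifts "new-post" above "about". This is the internal-linking tactic described above in miniature.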

Redirects

If a page is moving to a new URL, it is best practice to implement a server-side 301 (permanent) redirect. A URL change could happen for several reasons:

Moved site to new domain

People access the site through different URLs

You are merging two websites

A page was removed and users need to get sent to a new page

Generally speaking, a user will not be able to tell the difference between the types of redirects. However, choosing the correct redirect code (301 for a permanent move, 302 for a temporary one) is important for communicating with Google Search. You can read more in the Google Search Console directions. According to Google, there is no PageRank penalty for redirects. PageRank is only one of multiple ranking signals, and we do not know how the other signals handle redirects. If a URL changes, it is best practice to update any links to point to the new destination.
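Updating links is easier when redirects are kept in one map. The sketch below is a hypothetical helper, not a server configuration: given a dictionary of old path to new path (each assumed to be a 301), it follows chains to the final destination so links and redirect rules can point straight there, and it raises an error on redirect loops.

```python
def resolve(redirects: dict[str, str], path: str) -> str:
    """Follow a chain of permanent redirects and return the final destination."""
    seen = {path}
    while path in redirects:
        path = redirects[path]
        if path in seen:
            raise ValueError(f"redirect loop involving {path}")
        seen.add(path)
    return path

def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old path straight at its final destination, removing chains."""
    return {old: resolve(redirects, old) for old in redirects}

# Hypothetical history: an article was moved twice
redirects = {
    "/seo-guide": "/articles/seo-guide",
    "/articles/seo-guide": "/articles/seo-basics",
}
print(flatten(redirects))
# {'/seo-guide': '/articles/seo-basics', '/articles/seo-guide': '/articles/seo-basics'}
```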

Images

Images are an essential component of websites, are great for SEO, and can be the largest culprit of slow load times. The simple solution to long load times is image compression. For best results, use image formats and resolutions that are appropriate for their use. There is no reason to use a color-accurate 4k JPEG image for a thumbnail. For the best practices follow the Google guidelines.

If you need to convert images to WebP, there is a free online tool.

After you have selected the images you want on your site, you need to write the alt descriptions. Alt text is a short piece of text that describes the image and also serves several important purposes:

Images can be found by search engines: since search engines cannot actually see pictures (not yet), they read the alt text for the image.

Webpage will have better SEO: having keywords in your alt description can lead to higher SERP rankings.

Webpage will be more accessible: Visitors who have visual impairments rely on screen readers to navigate a website. Screen readers use the alt text to describe images.

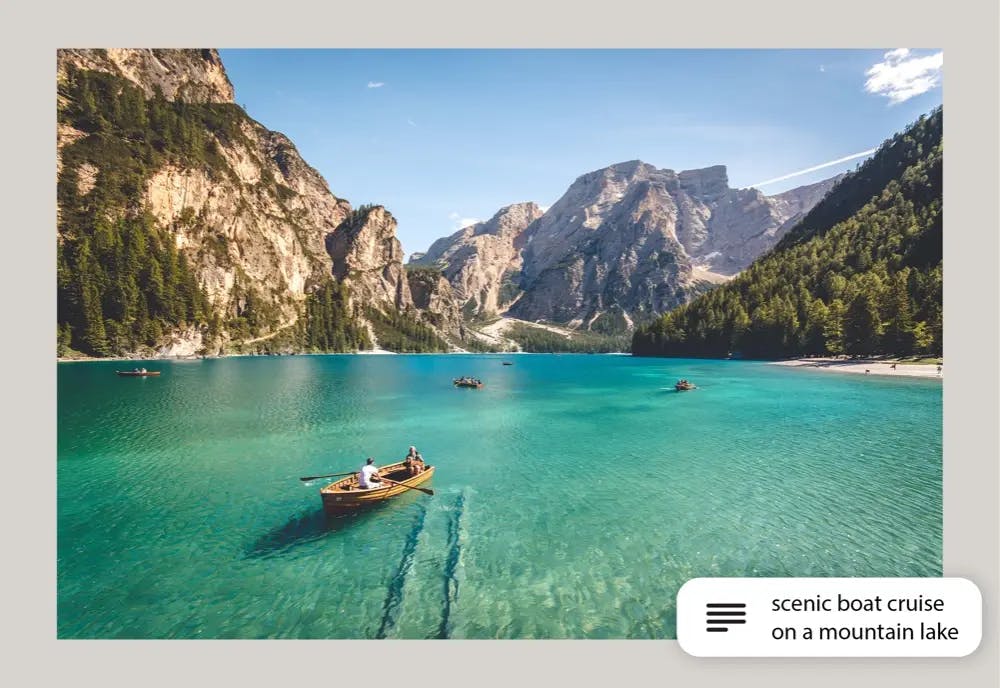

There is no one-size-fits-all template for writing alt text; it depends on the goal you have for your image. For the image above, there are several different alt descriptions we could write, and they change depending on whether it's used in a travel guide or as a portfolio piece for a photographer.

<!-- bad alt description -->

<img src="boats-on-lake.jpg" alt="boats on lake, mountains, people">

<!-- travel guide alt description -->

<img src="boats-on-lake.jpg" alt="scenic boat cruise on a mountain lake">

<!-- photographer portfolio alt description -->

<img src="boats-on-lake.jpg" alt="beautiful lake and mountain landscape photograph">

The alt description is a great place to add your target keyword, as long as you can also accurately describe the image. It is recommended to keep your alt text under 125 characters; otherwise, search engines might not fully read it.
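Checking every image by hand gets tedious on a large site. A minimal sketch with Python's built-in `html.parser` can flag images whose `alt` attribute is missing or longer than the 125-character guideline mentioned above. An empty `alt=""` is deliberately allowed, since it is the standard way to mark purely decorative images; the class name is hypothetical.

```python
from html.parser import HTMLParser

class AltTextAudit(HTMLParser):
    """Collect <img> tags whose alt text is missing or longer than max_length."""
    def __init__(self, max_length: int = 125) -> None:
        super().__init__()
        self.max_length = max_length
        self.problems: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "?"
        if "alt" not in attributes:
            self.problems.append((src, "missing alt"))
            return
        alt = attributes["alt"] or ""  # a bare `alt` attribute parses as None
        if len(alt) > self.max_length:
            self.problems.append((src, f"alt is {len(alt)} characters"))

audit = AltTextAudit()
audit.feed(
    '<img src="boats-on-lake.jpg" alt="scenic boat cruise on a mountain lake">'
    '<img src="logo.png">'
    '<img src="divider.png" alt="">'  # empty alt marks a decorative image
)
audit.close()
print(audit.problems)  # [('logo.png', 'missing alt')]
```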

Share-worthy Content (Backlinks)

Backlinks are one of the top search ranking factors used by Google. Increasing your number of backlinks helps to increase your SERP ranking. However, earning backlinks is one of the hardest parts of SEO and can take the greatest time commitment. There is a great tool by ahrefs to view the number of backlinks a website has.

When trying to get backlinks to your site, there are several methods that have shown relative success:

Guest Blogging: Writing one-off posts for another website.

Fixing Broken Links: Find links that are broken (free tool) and ask the owner to update to a working URL.

Skyscraper Technique: Find content relevant to your website that has lots of links, make a better piece of content, and ask those linking to the original to link to you instead.

Unlinked Mentions: Find mentions of your brand that are unlinked and ask the owner to properly link the mention.

Formatting

A great piece of content without proper formatting is difficult for a user to read. To make it as easy as possible, break up your text with headings, paragraph breaks, and graphics. Using bullet points, bold, and italics where possible allows the reader to skim and find what they are looking for. Beyond ease of use for the reader, proper formatting increases your chance of being featured in a snippet (position 0). Finally, always make sure to follow ADA accessibility guidelines. If you have questions, refer to the Google Web Fundamentals or the ADA Toolkit.

URL, Title, & Description

The URL, Title, and Description are the parts of a website that help describe to the user the content of a page before they visit.

URL

The URL of your website shows the location, or address, of each individual piece of content on the web. As with the rest of your website, it is necessary to structure it properly.

Page Names: Each page on your site has a unique URL, used by the browser to find the correct content. Making your URLs clear does not help the browser, but it does make a searcher more likely to click on a link. A user will feel more comfortable clicking on a link that reinforces the content of the webpage. The URL is a minor part of page ranking, but users won't click on a link they don't trust, even when it is ranked highly.

<!-- bad url -->

example.com/ljsdjn/article?a=h74k-s6hc-90jk

<!-- trustworthy url -->

example.com/articles/seo-basics

Page Organization: Structuring your pages in a way that makes sense, by grouping related content, can send signals about the type and topic of content.

<!-- topic pages grouped by category -->

example.com/recipes/apple-pie
example.com/recipes/banana-bread
example.com/authors/john-james
example.com/authors/mary-moore

<!-- time sensitive pages grouped by date -->

example.com/2020/december/best-of-2020
example.com/2013/february/upcoming-trends-to-watch

URL Length: Keeping your URLs short will prevent them from being cut off when displayed. Again, this is not a factor that directly affects search algorithms; rather, users are more likely to click on a shorter URL.

URL Keywords: As long as it's not forced, the URL is a great place to include your target keywords.

Hyphens: Separating words in the URL adds to readability and the trust factor. Hyphens are the most reliable character for separating words in a URL, and Google recommends them over underscores.

<!-- non separated url -->

example.com/articles/howtooptimizeforseo

<!-- hyphen separated url -->

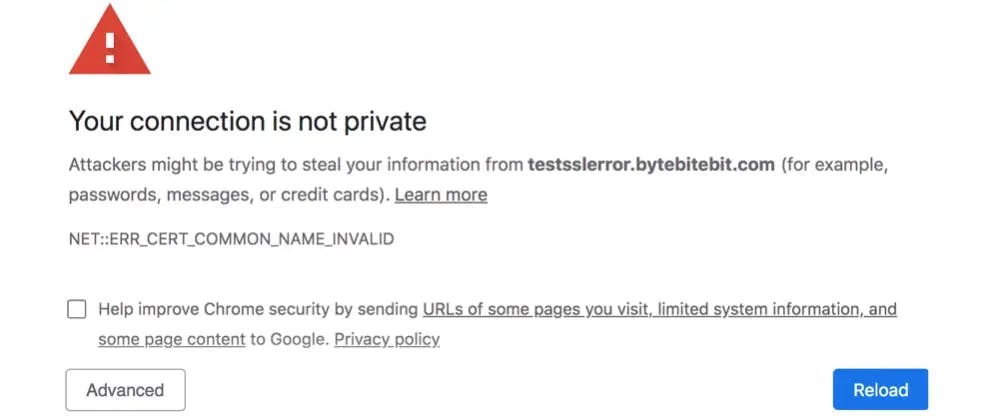

example.com/articles/how-to-optimize-for-seoProtocols: A protocol is either HTTP or HTTPS that precedes a URL. Google recommends that all website traffic is served over HTTPS, the extra s standing for secure. To use HTTPS you must get a SSL certificate which is used to encrypt the data being sent between the web browser and the server. If your site is not secure users will be greeted with a warning message before they can access the site.

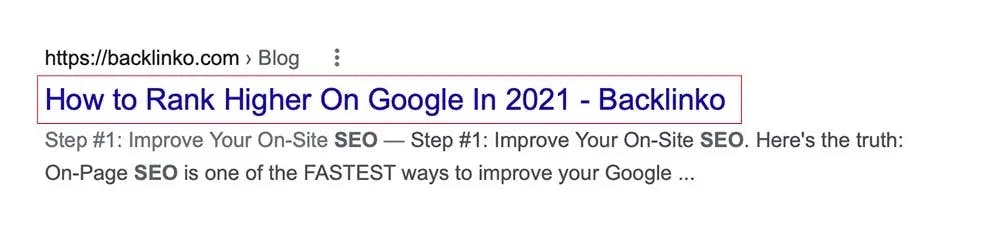
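Following the hyphen guideline above, URL slugs are often generated from the page title. A minimal sketch, assuming plain ASCII titles (accented letters are simply dropped here; a real site might transliterate them instead): straight apostrophes are removed first so "Beginner's" becomes "beginners", then every run of other non-alphanumeric characters becomes a single hyphen, and hyphens are trimmed from the ends.

```python
import re

def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    slug = title.lower().replace("'", "")    # "Beginner's" -> "beginners"
    slug = re.sub(r"[^a-z0-9]+", "-", slug)  # each run of other characters -> one hyphen
    return slug.strip("-")                   # no leading or trailing hyphens

print(slugify("How to Optimize for SEO"))              # how-to-optimize-for-seo
print(slugify("SEO Basics: Beginner's Guide to SEO"))  # seo-basics-beginners-guide-to-seo
```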

Title

The title is an HTML tag that specifies the title for a specific page. Each page on your website should have a unique title. The title is visible on the SERP, in the browser tab, and when a page is shared.

<head>
  <title>Example Title</title>
</head>

On most pages, especially blog posts, the title tag should be the same as the H1 tag. When writing the title tag, you should also follow similar guidelines.

Front-load your titles with the targeted keyword. Google puts a little more weight on terms that show up early so it is important to make sure your keyword is one of these terms.

Add Branding. Adding your company's name to the end of the title will help promote brand awareness and create a higher click-through rate among people familiar with your brand. In some places, such as the home page, it could even make sense to add your brand to the front of the title text.

Limit Length. Only the first 50-60 characters will be shown in the SERP; if your title tag is longer, an ellipsis will be added at the end. Keeping your title short is important, but do not sacrifice quality to stay under the 60-character limit.
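The three guidelines can be turned into a quick pre-publish check. In this sketch the 60-character limit comes from the guideline above, while treating "within the first three words" as front-loaded is an arbitrary assumption; Google actually truncates titles by pixel width, so the character count is only an approximation. The brand name "Example Co" is hypothetical.

```python
def check_title(title: str, keyword: str, brand: str, max_chars: int = 60) -> list[str]:
    """Return warnings for a title tag based on the guidelines above."""
    warnings = []
    lowered = title.lower()
    position = lowered.find(keyword.lower())
    if position == -1:
        warnings.append("target keyword is missing")
    elif len(lowered[:position].split()) >= 3:
        # Three or more words come before the keyword, so it is not front-loaded
        warnings.append("target keyword does not appear in the first three words")
    if brand.lower() not in lowered:
        warnings.append("brand name is missing")
    if len(title) > max_chars:
        warnings.append(f"{len(title)} characters; may be truncated in the SERP")
    return warnings

print(check_title("SEO Basics: Beginner's Guide to SEO | Example Co", "seo basics", "Example Co"))  # []
print(check_title("Everything You Need About SEO Basics | Example Co", "seo basics", "Example Co"))
```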

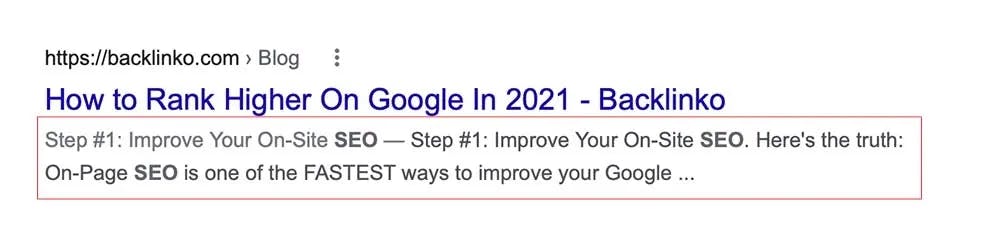

Description

The description is another HTML tag, displayed right after the title in the SERP and when the page is shared. Google says that meta descriptions are not a ranking factor, but like the title, the description is incredibly important for the click-through rate. Its job is to provide a more in-depth description of the page, though Google may trim the provided description to show only what is most relevant to the user's query.

<head>
  <meta name="description" content="Example Description">
</head>

Relevance: The meta description should be highly specific and summarize the key concepts of the page. The searcher should get enough information to know that they have found a relevant page without spoiling what your page has to offer.

Length: Search engines typically truncate meta descriptions at about 155 characters. Some guides recommend descriptions of 150-300 characters because certain positions in the SERP give extra space, typically when following a featured snippet. If unsure, try to keep the description to around 150 characters.
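When descriptions are generated from page content, they need to be trimmed and escaped. The sketch below is a hypothetical helper, not part of any CMS: it cuts text at a word boundary so the result stays within the roughly 155-character display limit mentioned above, adds an ellipsis, and uses `html.escape` so quotes in the text cannot break the `content` attribute.

```python
import html

def meta_description(text: str, limit: int = 155) -> str:
    """Build a <meta name="description"> tag, trimming long text at a word boundary."""
    text = " ".join(text.split())  # normalize whitespace
    if len(text) > limit:
        cut = text[: limit - 1].rsplit(" ", 1)[0]  # leave room for the ellipsis
        text = cut.rstrip(",.;:") + "…"
    return f'<meta name="description" content="{html.escape(text, quote=True)}">'

print(meta_description('Learn the "basics" of SEO.'))
# <meta name="description" content="Learn the &quot;basics&quot; of SEO.">
```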

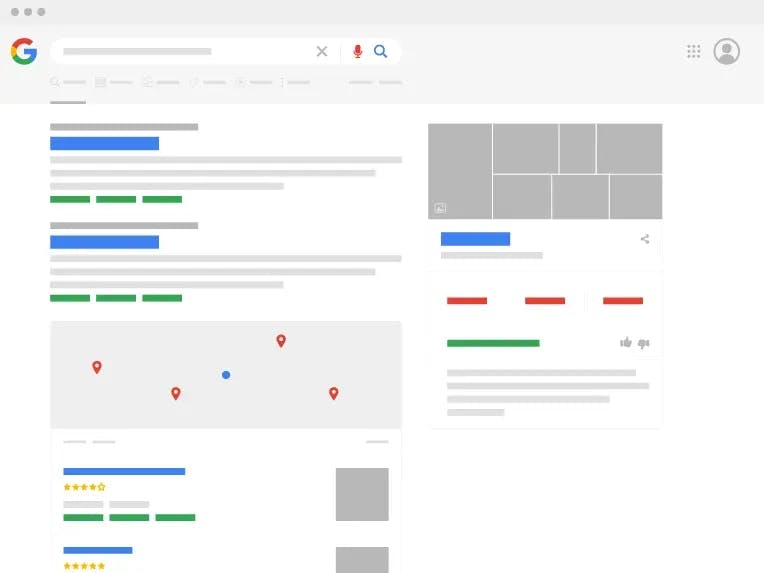

Snippet & Schema Markup

Snippets, also referred to as SERP features, are elements on the Google search results page that go beyond the standard ten blue links. SERP features are not part of the standard organic search results and fall into four main categories:

Rich snippets add a visual layer on top of the standard search result; these can include ratings, breadcrumbs, FAQs, sitelinks, and more.

Paid results are bought by bidding on keywords through AdWords (now Google Ads) or Google Shopping.

Universal results are shown in addition to the organic search results and can take the form of image results, news results, or featured snippets.

Knowledge graph data, which appears in panels or boxes. This can be information on a dog breed, a celebrity, or the current weather.

To appear in a SERP feature, your page needs to support structured data. When a web crawler scans a recipe blog, it cannot reliably tell which parts of the page are the author, the recipe, and the ingredients. Schema markup solves this by letting you tell the crawler directly what information is on the page. Google's preferred format for this markup is JSON-LD, and the schema.org vocabulary offers hundreds of types to choose from.

If you want to markup your web pages to support snippets, the first step is to understand structured data, what it is, and how it works. From there you can start adding structured data to your pages, all the while making sure to follow the data guidelines.
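For the recipe blog example above, a JSON-LD block could be generated like this. The `@context`, `@type`, `name`, `author`, and `recipeIngredient` keys come from the schema.org `Recipe` vocabulary; the recipe itself is made up, and Google's structured data guidelines list further required and recommended properties for recipe rich results, so treat this as a minimal sketch.

```python
import json

def recipe_json_ld(name: str, author: str, ingredients: list[str]) -> str:
    """Return a <script> tag containing schema.org Recipe markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "author": {"@type": "Person", "name": author},
        "recipeIngredient": ingredients,
    }
    # Escape "</" so a literal </script> in the data cannot close the tag early
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'

print(recipe_json_ld("Apple Pie", "Mary Moore", ["6 apples", "1 pie crust", "1/2 cup sugar"]))
```

The generated tag can be placed in the page's `<head>` or `<body>`; either location works for JSON-LD.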

Additional Resources

If you want to read more on the seven steps listed above, you can read the Moz Beginner's Guide to SEO.

For the latest on SEO from Google, read the documentation.

Free SEO tools from ahrefs.